Due to the rigorous nature of InTime's approach, we'd often get comments like, "You mean this takes 100 compilations more than usual?!" An evaluation customer made an interesting comment recently. This time it was, "I need to evaluate InTime more as it met timing too quickly." It felt like a compliment hidden within a complaint, or vice versa! We're just happy that they put in the time and effort to evaluate InTime and it gave them good results.

I actually think that the two pieces of feedback mean the same thing: the software is not fully understood, not so much in terms of how it works, but in terms of how much potential this approach has. As engineers and innovators, we take a lot of pride in creation. Most competent FPGA designers come with a whole bag of techniques and tricks in their repertoire. They can solve most problems using their understanding of the RTL and architecture.

Except for settings...

As one partner puts it, "We felt like we'd lost if we started working with the settings (of the FPGA tools)." It was almost as if any approach not involving RTL changes bordered on cheating. It is not cheating! Whether you realize it or not, you are already using default values for the settings in every design; it's just that when your design doesn't meet timing or doesn't fit into the target device, the defaults have not helped. The question to ask, then, is: how much can the settings help in your particular design?

Can InTime's approach really solve your problems?

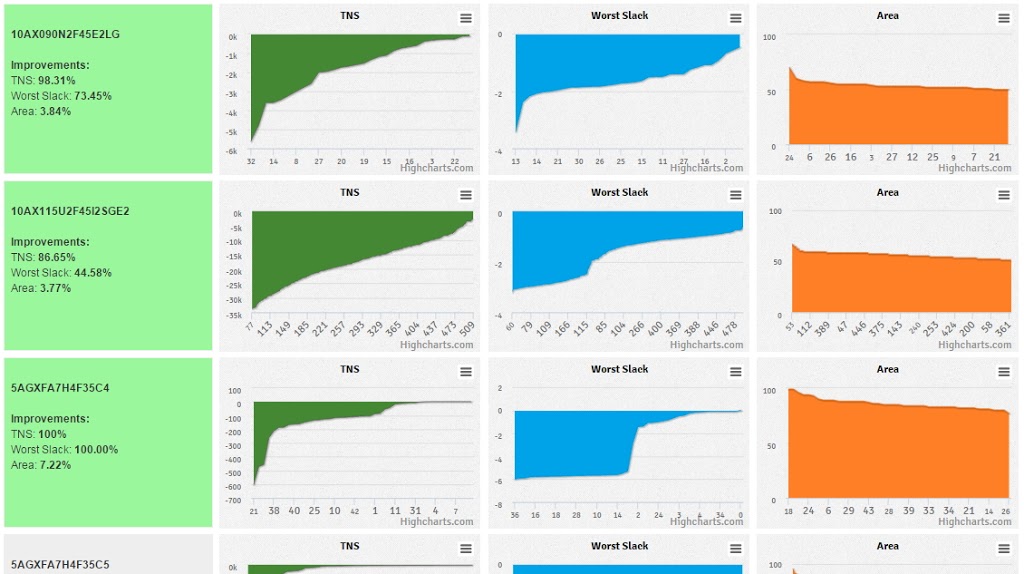

It is true that using the optimal group of settings might not enable you to hit that performance target. However, it is undeniable that using the right ones from the start will get you to a better result more quickly. Let us look at various customer designs and their results using InTime below:

The full details can be found at this URL.

The various charts show three different metrics, TNS (Total Negative Slack), WS (Worst Slack) and Area, across various customer designs. They are color-coded to differentiate between customers. We highlight the left-most cell in green if there is at least 80% improvement over the original score, whether it is TNS, WS or Area. 100% improvement means we have met timing. Grey indicates less than 80% improvement (but at least 50%), and red indicates less than 50% improvement. N.A. means that the original timing scores were not captured in the dataset.

Here is what was observed for successful cases:

1. Planning and Coding Guidelines

If you plan carefully and follow FPGA-optimized coding guidelines, it is easy to get InTime to produce results. (One of the cases in red is due to asynchronous design issues. The other did too few compilation runs.) Xilinx has published detailed coding guidelines to help in this area.

2. Starting Timing Scores

This might seem obvious, but a worse (more negative) initial TNS makes it harder to close timing than one closer to zero! From our data, the average cutoff TNS is -6000ns. In general, closing timing is possible for anything better than -6000ns (that is, closer to zero). So far InTime has not closed timing on designs with initial TNS values that are worse than -6000ns. If your design is still beyond this threshold, for example, at an earlier stage of the design flow, InTime is still helpful because it points out significant critical paths that you should tackle first. For the record, the "worst" TNS we've encountered was a whopping -60,000ns!
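To make the chart color coding and the TNS cutoff concrete, here is a minimal Python sketch. It is not InTime code or part of any InTime API: the function names are hypothetical, and the improvement formula (the fraction of the original negative slack that was removed) is our assumption about how such a percentage could be computed for TNS or WS. Treating exactly 50% as grey is also an assumption. The -6000ns value is simply the average cutoff observed above, not a guarantee.

```python
# Illustrative sketch only; names and formula are assumptions, not InTime's API.

TNS_CUTOFF_NS = -6000.0  # average cutoff observed in the customer data


def improvement_percent(original_ns: float, best_ns: float) -> float:
    """Percentage improvement of a failing timing score (TNS or WS, in ns)."""
    if original_ns >= 0:
        raise ValueError("original score already meets timing")
    # A non-negative result means timing is met, so clamp it to zero (100%).
    remaining = min(best_ns, 0.0)
    return (1 - remaining / original_ns) * 100


def color_for(improvement: float) -> str:
    """Map an improvement percentage to the chart colors described above."""
    if improvement >= 80:
        return "green"
    if improvement >= 50:
        return "grey"
    return "red"


def within_cutoff(initial_tns_ns: float) -> bool:
    """True if the initial TNS is no worse than the observed -6000ns cutoff."""
    return initial_tns_ns >= TNS_CUTOFF_NS


if __name__ == "__main__":
    # Hypothetical design: TNS goes from -200ns to -50ns.
    pct = improvement_percent(-200.0, -50.0)
    print(pct, color_for(pct), within_cutoff(-200.0))
```

For the hypothetical design in the usage example, three quarters of the negative slack is removed, which falls in the grey band; a design that reaches a TNS of zero would score 100% and be shown in green.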

Although we don't have sufficient data to justify some observations, there are some other interesting snippets of note. For instance, a bit of floorplanning seems helpful: in general, a post-floorplanned design converges faster (fewer compilations required, more successful results). Also, the results definitely correlate with whether an older or newer FPGA tool version is used.

One last thing to mention here: the charts and analytics are made possible by a collective, crowd-sourced effort from our customers and partners. By sharing InTime data with us, they help further improve our analytics. If you are planning to evaluate InTime in the future, please help by sharing your InTime data with us.

Let us know if you have any questions. Thanks for reading!