Solving timing problems via software tools has always been the exclusive domain of the FPGA vendors. As designers, we are conditioned to run timing analysis, examine timing reports and then close timing by changing RTL and constraints. Occasionally (and this is my favorite tip so far), I'm told to update the FPGA software to the newest version or patch.

When faced with a third-party timing closure tool like our InTime software, many designers, beginners and veterans alike, have plenty of questions and probably a healthy dose of skepticism.

This post hopes to answer some of them. Please feel free to ping us if you have more.

First, a bit of background:

InTime was created to tackle timing closure under 2 conditions:

- You want to avoid touching the RTL. Code is frozen. Heaven forbid that new bugs and functionality issues be introduced! Or you could be part of the verification team and don't have the rights to modify anything.

- The FPGA tools are leaving a lot of quality on the table. A multitude of synthesis and place-and-route options are available but most of the time, we only exercise a small percentage.

Tweak it to the max

Many customers say that they don't tweak tool settings unless they need to. Usually they have made edits upon edits to the RTL to no avail, and the timing issues persist. At that point, people feel compelled to try different tool settings, starting with common ones like placement seeds.

Explorers to the rescue

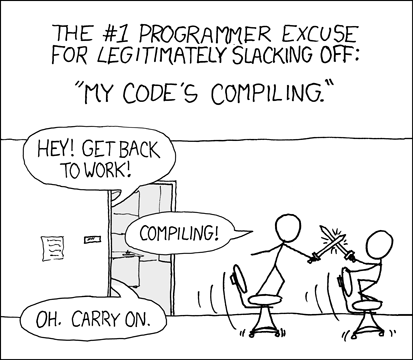

Credits: XKCD - Compiling

Naturally our FPGA vendors understand the difficulties of choosing tool settings and came to the rescue with "XXX Explorer" software. These software tools provide canned setting combinations or strategies which have been handpicked by the vendors.

A major trade-off of this approach is runtime. As the XXX Explorer iterates through multiple combinations, you either wait for it to compile all the combinations in sequence, or spend time setting up a Linux cluster to run them in parallel. (These tools do not support Windows clusters.)

More importantly, the combinations are fixed per device family, regardless of the design. This doesn't make sense, as every design is unique, with its own characteristics, resource utilization and timing requirements. If such a thing as a single killer setting combination existed, life would be much simpler!

Come on ... FPGA tool settings... Are they really useful?

The amount of skepticism about the effectiveness of tool settings on timing problems is no laughing matter.

Traditionally, we have been taught to modify RTL and constraints - "Fix the root of the problem", they say. "Using tool settings might mask real issues." We agree and encourage you to do that if your timing problems can be easily resolved.

But let me remind you that you aren't avoiding tool settings at all. When we compile without explicitly specifying options, we are simply using the default settings of the FPGA tools. Default values can be "Auto", which may mean "On", "Normal Compilation", or some numerical value like "1.0". To take this thought one step further, you are really carving your RTL to satisfy this set of default settings.

Quest for the Holy Grail of settings

InTime believes that there is an optimal combination of settings for your design. With more than 70 different settings and a humongous search space of 70 factorial (roughly a 1 followed by 100 zeros), finding the optimal settings is a challenge.
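To get a feel for that number, here is a quick back-of-the-envelope check in Python. It only verifies the size of 70 factorial as quoted above. The comparison against 70 simple on/off switches is my own illustrative assumption and not a description of how the real settings are structured.

```python
import math

N_SETTINGS = 70  # the "more than 70 settings" figure quoted above

orderings = math.factorial(N_SETTINGS)  # 70!
digit_count = len(str(orderings))       # number of decimal digits in 70!

# Hypothetical comparison point (an assumption, not from the tools):
# 70 independent settings that can each only be switched on or off.
on_off_combinations = 2 ** N_SETTINGS

print(f"70! is about {orderings:.3e} ({digit_count} digits)")
print(f"2**70 is about {on_off_combinations:.3e}")
```

Even the much smaller on/off figure is far beyond what anyone could compile exhaustively, which is why a smarter search is needed.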

InTime derives the optimal combination of settings by using Machine Learning to understand the correlations between your design, the settings, the device and sometimes, your FPGA tool version.

This is enabled by running multiple compilations of your design in parallel and in different stages. With the results, we analyze the data, learn from them and save them into your database. This means that the next time you run the same design, InTime already knows which settings are good for that design.

The best combination, or "strategy" as we call it, is unique to each design. Each compilation becomes a data point in the InTime machine learning process, so even bad results matter.

Randomly planting seeds

One other important thing to understand about settings is that some are seemingly random, for example the placement seed. Many users have tried placement seeds, failed to find an answer and bemoaned the ineffectiveness of seeds. Or, on lucky occasions, they met timing and hailed the discovery of a "miracle seed" value.

The right way to use seeds is to see them as a probability. Across seed values 1 to 100, you will notice a variance in the results. This variance determines the probability of meeting timing. For instance, if your original Total Negative Slack (TNS) is -1,000 and your variance is 100, then seeds are a complete waste of time: no seed is likely to make up a deficit ten times larger than the spread.

The InTime way is to lower your TNS to -100 and then try seeds. This way you have a good chance of meeting timing.
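The reasoning above can be sketched with a toy model. This is only an illustration under loud assumptions: I treat the TNS across seeds as normally distributed, I read the "variance" of 100 as a standard deviation, and I count a seed as meeting timing when its sampled value reaches 0 or better. Real seed results are not guaranteed to follow this shape, and the function names are made up for this example.

```python
import math

def p_seed_meets_timing(mean_tns, spread):
    """P(one seed lands at TNS >= 0), assuming TNS ~ Normal(mean_tns, spread)."""
    # Upper-tail probability written with erfc so tiny values do not round to 0.
    return 0.5 * math.erfc(-mean_tns / (spread * math.sqrt(2)))

def p_any_seed_meets_timing(mean_tns, spread, n_seeds):
    """P(at least one of n_seeds independent seeds meets timing)."""
    p = p_seed_meets_timing(mean_tns, spread)
    if p >= 1.0:
        return 1.0
    # 1 - (1 - p)**n, computed via log1p/expm1 to stay accurate for tiny p.
    return -math.expm1(n_seeds * math.log1p(-p))

# Assumed numbers taken from the example in the text, with 100 seeds tried.
hopeless = p_any_seed_meets_timing(mean_tns=-1000, spread=100, n_seeds=100)
promising = p_any_seed_meets_timing(mean_tns=-100, spread=100, n_seeds=100)

print(f"TNS -1000, spread 100: {hopeless:.2e}")
print(f"TNS  -100, spread 100: {promising:.6f}")
```

Under these assumptions the first case is practically zero and the second is above 0.99, which matches the intuition: first close the gap, then roll the dice.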

Fortunately, or unfortunately for some, certain FPGA software tools have gotten rid of seeds. But the idea still holds when dealing with any random setting.

So... can InTime close timing?

The quality of the results depends on how much compilation data is generated for the machine learning process. Run time also factors in. If you compare a total of 10 hours' worth of builds against 100 hours' worth, the latter will generally yield better quality of results (QoR).

We have seen customers close timing on designs with TNS ranging from 0.5ns to as high as 500ns! A lower TNS does not necessarily mean it is easier to meet timing, though: we've also encountered designs with very low TNS that really struggle to meet timing.

In the worst possible case where InTime still can't meet timing, it still provides critical path reports that rank the severity of the paths so that you can prioritize your debug efforts. Or you can just pick the result with the best timing and work on it.
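To illustrate what ranking paths by severity could look like, here is a minimal sketch. How InTime actually scores severity is not described in this post, so the scoring below (how often a path fails across builds, then its worst slack) is purely my assumption. The path names and slack values are invented sample data.

```python
from collections import defaultdict

def rank_failing_paths(runs):
    """Rank paths by number of failing builds, then by worst slack.

    runs: list of dicts mapping path name -> slack in ns (negative = failing).
    """
    stats = defaultdict(lambda: {"fail_count": 0, "worst_slack": 0.0})
    for run in runs:
        for path, slack in run.items():
            if slack < 0:
                entry = stats[path]
                entry["fail_count"] += 1
                entry["worst_slack"] = min(entry["worst_slack"], slack)
    # Most frequent offenders first; ties broken by the most negative slack.
    return sorted(
        stats.items(),
        key=lambda item: (-item[1]["fail_count"], item[1]["worst_slack"]),
    )

# Hypothetical slack numbers from three builds of the same design.
runs = [
    {"cpu/alu -> cpu/regfile": -0.40, "dma/fifo -> dma/ctrl": -0.10, "uart/tx -> uart/shift": 0.25},
    {"cpu/alu -> cpu/regfile": -0.35, "dma/fifo -> dma/ctrl": 0.05, "uart/tx -> uart/shift": 0.30},
    {"cpu/alu -> cpu/regfile": -0.20, "dma/fifo -> dma/ctrl": -0.15, "uart/tx -> uart/shift": 0.10},
]

ranked = rank_failing_paths(runs)
for path, info in ranked:
    print(f"{path}: failed {info['fail_count']}x, worst slack {info['worst_slack']} ns")
```

A path that fails in every build regardless of settings is a good candidate for an RTL or constraint fix, while one that fails only occasionally may just need a better strategy.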

For me, the nicest thing about InTime is that you can solve problems without touching code. When we are pressed for time to deliver, our focus is often on resolving all the bugs and issues and getting it ready to be verified. Make the FPGA tools work harder for you, and save precious time.